In the 2021 Appropriations Act, Congress directed the Federal Trade Commission to study and report on whether and how artificial intelligence (AI) "may be used to identify, remove, or take any other appropriate action necessary to address" a wide variety of specified "online harms."1 Congress refers specifically to content that is deceptive, fraudulent, manipulated, or illegal, and to particular examples such as scams, deepfakes, fake reviews, opioid sales, child sexual exploitation, revenge pornography, harassment, hate crimes, and the glorification or incitement of violence. Also listed are misleading or exploitative interfaces, terrorist and violent extremist abuse of digital platforms, election-related disinformation, and counterfeit product sales. Congress seeks recommendations on "reasonable policies, practices, and procedures" for such AI uses and on legislation to "advance the adoption and use of AI for these purposes."

AI is defined in many ways and often in broad terms.3 The variations stem in part from whether one sees it as a discipline (e.g., a branch of computer science), a concept (e.g., computers performing tasks in ways that simulate human cognition), a set of infrastructures (e.g., the data and computational power needed to train AI systems), or the resulting applications and tools.4 In a broader sense, it may depend on who is defining it for whom, and who has the power to do so.

We assume that Congress is less concerned with whether a given tool fits within a definition of AI than whether it uses computational technology to address a listed harm. In other words, what matters more is output and impact.6 Thus, some tools mentioned herein are not necessarily AI-powered. Similarly, and when appropriate, we may use terms such as automated detection tool or automated decision system,7 which may or may not involve actual or claimed use of AI. We may also refer to machine learning, natural language processing, and other terms that — while also subject to varying definitions — are usually considered branches, types, or applications of AI.

We note, too, that almost all of the harms listed by Congress are not themselves creations of AI and, with a few exceptions like deepfakes, existed well before the Internet. Greed, hate, sickness, violence, and manipulation are not technological creations, and technology will not rid society of them.8 While social media and other online environments can help bring people together, they also provide people with new ways to hurt one another and to do so at warp speed and with incredible reach.

No matter how these harms are generated, technology and AI do not play a neutral role in their proliferation and impact. Indeed, in the social media context, the central challenge of the Congressional question posed here should not be lost: the use of AI to address online harm is merely an attempt to mitigate problems that platform technology — itself reliant on AI — amplifies by design and for profit in accord with marketing incentives and commercial surveillance. Harvard University Professor Shoshana Zuboff has explained that platforms' engagement engines — powering human data extraction and deriving from surveillance economics — are the crux of the matter and that "content moderation and policing illegal content" are mere "downstream issues."10 Platforms do use AI to run these engines, which can and do amplify harmful content. In a sense, then, one way for AI to address this harmful content is simply for platforms to stop using it to spread that content. Congress has asked us to focus here, however, not on the harm that big platforms are causing with AI's assistance but on whether anyone's use of AI can help address any of the specified online harms.

Out of scope for this report are the widely expressed concerns about the use of AI in other contexts, including offline applications. As Congress directed, we focus here only on the use of AI to detect or address the specified online harms. Nonetheless, it turns out that even such well-intended AI uses can have some of the same problems — like bias, discrimination, and censorship — often discussed in connection with other uses of AI.

The FTC's work has addressed AI repeatedly, and this work will likely deepen as AI's presence continues to rise in commerce. Two recent FTC cases — one against Everalbum and the other against Facebook11 — have dealt with facial recognition technology.12 Commissioner Rebecca Kelly Slaughter has written about AI harms,13 as have FTC staff members.14 A 2016 FTC report, Big Data: A Tool for Inclusion or Exclusion?, discussed algorithmic bias in depth.15 The agency has also held several public events focused on AI issues, including workshops on dark patterns and voice cloning, sessions on AI and algorithmic bias at PrivacyCon 2020 and 2021, a hearing on competition and consumer protection issues with algorithms and AI, a FinTech Forum on AI and blockchain, and an early forum on facial recognition technology (resulting in a 2012 staff report).16 Some of these matters and events are discussed in more detail in the 2021 FTC Report to Congress on Privacy and Security.17

Reflecting this subject's importance, in November 2021, Chair Khan announced that the agency had hired its first-ever advisors on artificial intelligence.18 The FTC has also sought to add more technologists to its professional staff. The FTC is not primarily a science agency, however, and is not currently authorized or funded to engage in scientific research beyond its jurisdiction. The FTC has traditionally consisted of lawyers, investigators, economists, and other professionals specializing in enforcement, regulatory, educational,19 and policy efforts relating to consumer protection and competition. Some other federal agencies and offices do engage in more sustained AI-related work, sometimes as a central part of their mission.

With these agency caveats in mind, it is important to recognize that only a few of the harms Congress specified fall within the FTC's mission to protect consumers from deceptive or unfair commercial conduct. Many others do not, such as criminal conduct, terrorism, and election-related disinformation. It is possible, however, that changes to platforms' advertising-dependent business models, including the incentives for commercial surveillance and data extraction, could have a substantial impact in those categories. Further, some disinformation campaigns are simply disguises for commercially motivated actors.20 We did consult informally with relevant federal agencies and offices on some issues, including the Department of State, the Department of Homeland Security (DHS), the Defense Advanced Research Projects Agency (DARPA), and the National Artificial Intelligence Initiative Office.21 Thus, although we discuss each harm Congress lists, we would defer to other parts of the government on the topics as to which they are much more engaged and knowledgeable.

The scope of the listed harms leads to a few other preliminary observations. First, while that scope is broad, Congress does not ask for a report covering all forms of online harm or the general problem of online misinformation and disinformation. Second, the wide variety of the listed harms means that no one-size-fits-all answers exist as to whether and how AI can or should be used to address them. In some cases, AI will likely never be appropriate or at least not be the best option. Many of the harms are distinct in ways that make AI more or less useful or that would make regulating or mandating its use more or less of a legal minefield. For example, both AI and humans have trouble discerning whether particular content falls within certain categories of harm, which can have shifting and subjective meanings. Moreover, while some harms refer to content that is plainly illegal, others involve speech protected by the First Amendment. To the extent a harm can be clearly defined, AI tools can help to reduce it, albeit with serious limitations and the caveat that AI will never be able to replace the human labor required to monitor and contend with these harms across the current platform ecosystem.

Finally, we note that Congress does not refer to who may be deploying these tools, requiring their use, or responsible for their outcomes. The use of AI to combat online harm is usually discussed in the context of content moderation efforts by large social media platforms. We do not limit the report strictly to that context, however, because the Congressional language does not mention "social media" at all and refers to "platforms" only in connection with terrorists and violent extremists. Governments and others may deploy these tools, too. Other parts of the online ecosystem or "tech stack" are also fair game, including search engines, gaming platforms, and messaging apps. That said, the body of the report reflects the fact that much of the research and policy discussion in this area focuses on social media, and for good reasons. These platforms and other large technology companies maintain the infrastructure in which these harms have been allowed to flourish, and despite mixed incentives to deal with those harms, they also control most of the resources to develop and deploy advanced mitigation tools.

II. EXECUTIVE SUMMARY

The deployment of AI tools intended to detect or otherwise address harmful online content is accelerating. Largely within the confines of, or via funding from, the few big technology companies that have the necessary resources and infrastructure, AI tools are being conceived, developed, and used for purposes that include combating many of the harms listed by Congress. Given the amount of online content at issue, this result appears to be inevitable, as a strictly human alternative is impossible or extremely costly at scale.

Nonetheless, it is crucial to understand that these tools remain largely rudimentary, have substantial limitations, and may never be appropriate in some cases as an alternative to human judgment. Their use — both now and in the future — raises a host of persistent legal and policy concerns. The key conclusion of this report is thus that governments, platforms, and others must exercise great caution in either mandating the use of, or over-relying on, these tools even for the important purpose of reducing harms. Although outside of our scope, this conclusion implies that, if AI is not the answer and if the scale makes meaningful human oversight infeasible, we must look at other ways, regulatory or otherwise, to address the spread of these harms.

A central failing of these tools is that the datasets supporting them are often not robust or accurate enough to avoid false positives or false negatives. Part of the problem is that automated systems are trained on previously identified data and then have problems identifying new phenomena (e.g., misinformation about COVID-19). Mistaken outcomes may also result from problems with how a given algorithm is designed. Another issue is that the tools use proxies that stand in for some actual type of content, even though that content is often too complex, dynamic, and subjective to capture, no matter what amount and quality of data one has collected. In fact, the way that researchers classify content in the training data generally includes removing complexity and context — the very things that in some cases the tools need to distinguish between content that is or is not harmful. These challenges mean that developers and operators of these tools are necessarily reactive and that the tools — assuming they work — need constant adjustment even when they are built to make their own adjustments.

The limitations of these tools go well beyond merely inaccurate results. In some instances, increased accuracy could itself lead to other harms, such as enabling increasingly invasive forms of surveillance. Even with good intentions, their use can also lead to exacerbating harms via bias, discrimination, and censorship. Again, these results may reflect problems with the training data (possibly chosen or classified based on flawed judgments or mislabeled by insufficiently trained workers), the algorithmic design, or preconceptions that data scientists introduce inadvertently. They can also result from the fact that some content is subject to different and shifting meanings, especially across different cultures and languages. These bad outcomes may also depend on who is using the tools and their incentives for doing so, and on whether the tool is being used for a purpose other than the specific one for which it was built.

Further, as these AI tools are developed and deployed, those with harmful agendas — whether adversarial nations, violent extremists, criminals, or other bad actors — seek actively to evade and manipulate them, often using their own sophisticated tools. This state of affairs, often referred to as an arms race or cat-and-mouse game, is a common aspect of many kinds of new technology, such as in the area of cybersecurity. This unfortunate feature will not be going away, and the main struggle here is to ensure that adversaries are not in the lead. This task includes considering possible evasions and manipulations at the tool development stage and being vigilant about them after deployment. However, this brittleness in the tools — the fact that they can fail with even small modifications to inputs — may be an inherent flaw.

While AI continues to advance in this area, including with existing government support, all of these significant concerns suggest that Congress, regulators, platforms, scientists, and others should exercise great care and focus attention on several related considerations.

First, human intervention is still needed, and perhaps always will be, in connection with monitoring the use and decisions of AI tools intended to address harmful content. Although the enormous amount of online content makes this need difficult to fulfill at scale, most large platforms acknowledge that automated tools aren't good enough to work alone. That said, even extensive human oversight would not solve for underlying algorithmic design flaws. In any event, the people tasked with monitoring the decisions these tools make, the "humans in the loop," deserve adequate training, resources, and protection to do these difficult jobs. Employers should also provide them with enough agency and time to perform the work and should not use them as scapegoats for the tools' poor decisions and outcomes. Of course, even the best-intentioned and well-trained moderators will bring their own biases to the work, including a tendency to defer to machines ("automation bias"), reflecting that moderation decisions are never truly neutral. Nonetheless, machines should not be allowed to discriminate where humans cannot.

Second, AI use in this area needs to be meaningfully transparent, which includes the need for it to be explainable and contestable, especially when people's rights are involved or when personal data is being collected or used. Some platforms may provide more information about their use of automated tools than they did previously, but it is still mostly hidden or protected as trade secrets. Transparency can mean many things, and exactly what should be shared with which audiences and in what way are all questions under debate. The public should have more information about how AI-based tools are being used to filter content, for example, but the average citizen has no use for pages of code. Platforms should be more open to sharing information about these tools with researchers, though they should do so in a manner that protects the privacy of the subjects of that shared data. Such researchers should also have adequate legal protection to do their important work. Public-private partnerships are also worth exploring, with due consideration of both privacy and civil liberty concerns.

Third, and intertwined with transparency, platforms and other companies that rely on AI tools to clean up the harmful content their services have amplified must be accountable both for their data practices and for their results. After all, transparency means little, ultimately, unless we can do something about what we learn from it. In this context, accountability would include meaningful appeal and redress mechanisms for consumers and others — woefully lacking now and perhaps hard to imagine at scale — and the use of independent audits and algorithmic impact assessments (AIAs). Frameworks for such audits and AIAs have been proposed, but many questions about their focus, content, and norms remain. Like researchers, auditors also need protection to do this work, whether they are employed internally or externally, and they themselves need to be held accountable. Possible regulation to implement both transparency and accountability requirements is discussed below, and the import of focusing on these goals cannot be overstated, though they do not stand in for more substantive reforms.

Fourth, data scientists and their employers who build AI tools — as well as the firms procuring and deploying them — are responsible for both inputs and outputs. They should all strive to hire and retain diverse teams, which may help reduce inadvertent bias or discrimination, and to avoid using training data and classifications that reflect existing societal and historical inequities. Appropriate documentation of the datasets, models, and work undertaken to create these tools is important in this regard. They should all be concerned, too, with potential impact and actual outcomes, even though those designing the tools will not always know how they will ultimately be used. Further, they should always keep privacy and security in mind, such as in their treatment of the training data. It may be that these responsibilities need to be imposed on executives overseeing development and deployment of these tools, not merely pushed as ethical precepts.

Fifth, platforms and others should use the range of interventions at their disposal, such as tools that slow the viral spread or otherwise limit the impact of certain harmful content. Automated tools can do more with harmful content than simply identify and delete it. These tools can change how platform users engage with content and are thus internal checks on a platform's own recommendation engines that result in such engagement in the first place.23 They include, among other things, limiting ad targeting options, downranking, labeling, or inserting interstitial pages with respect to problematic content. How effective any of these tools are — and under what circumstances — is unknown and often still dependent on detection of particular content, which, as noted, AI usually does not do well. In any event, the efficacy of these tools needs more study, which is severely hindered by platform secrecy. AI tools can also help map and uncover networks of people and entities spreading the harmful content at issue. As a corollary, they can be used to amplify content deemed authoritative or trustworthy. Assuming confidence in who is making those determinations, such content could be directed at populations that were targets of malign influence campaigns (debunking) or that may be such targets in the future (prebunking). Such work would go hand-in-hand with other public education efforts.

Sixth, it is possible to give individuals the ability to use AI tools to limit their personal exposure to certain harmful or otherwise unwanted content. Filters that enable people, at their discretion, to block certain kinds of sensitive or harmful content are one example of such user tools. These filters may necessarily rely on AI to determine whether given content should pass through or get blocked. Another example is middleware, a tailored, third-party content moderation system that would ride atop and filter the content shown on a given platform. These systems mostly do not yet exist but are the topic of robust academic discussion, some of which questions whether a viable market could ever be created for them.

Seventh, to the extent that any AI tool intended to combat online harm works effectively and without unfair or biased results, it would help for smaller platforms and other organizations to have access to it, since they may not have the resources to create it on their own. As noted above, however, these tools have largely been developed and deployed by several large technology companies as proprietary items. On the other hand, greater access to such tools carries its own set of problems, including potential privacy concerns, such as when datasets are transmitted with the algorithm. Indeed, access to user data should be granted only when robust privacy safeguards are in place. Another problem is that the more widely a given tool is in use, the easier it will be to exploit.

Eighth, given the limitations on using AI to detect harmful content, it is important to focus on key complementary measures, particularly the use of authentication tools to identify the source of particular content and whether it has been altered. These tools — which could involve blockchain, among other things — can be especially helpful in dealing with the provenance of audio and video materials. Like detection tools, however, authentication measures have limits and are not helpful for every online harm.

Finally, in the context of AI and online harms, any laws or regulations require careful consideration. Given the various limits of and concerns with AI, explicitly or effectively mandating its use to address harmful content — such as overly quick takedown requirements imposed on platforms — can be highly problematic. The suggestion or imposition of such mandates has been the subject of major controversy and litigation globally. Among other concerns, such mandates can lead to overblocking and put smaller platforms at a disadvantage. Further, in the United States, such mandates would likely run into First Amendment issues, at least to the extent that the requirements impact legally protected speech. Another hurdle for any regulation in this area is the need to develop accepted definitions and norms not just for what types of automated tools and systems are covered but for the harms such regulation is designed to address.

Putting aside laws or regulations that would require more fundamental changes to platform business models, the most valuable direction in this area — at least as an initial step — may be in the realm of transparency and accountability. Seeing and allowing for research behind platforms' opaque screens (in a manner that takes user privacy into account) may be crucial for determining the best courses for further public and private action.24 It is hard to craft the right solutions when key aspects of the problems are obscured from view.

I am a good Bing.

stories about modern artificial intelligence.

A project by Liv Erickson, Zach Fox, and open-source contributors like you.

Google introduces Gemini; doesn't address any of the hard questions

Google and Alphabet CEO Sundar Pichai introducing Gemini

No matter how you feel about the changes that generative AI has brought to our society over the past few years, there is no denying that Google's new Gemini AI model, released today, will cause another big change in the way people use technology.

Google boasts "state-of-the-art" performance, noting high performance in a number of benchmarks (and low performance in others). Humans often celebrate "numbers going up," but should we really be celebrating a model that passes any test with a score of 59.4%, as Gemini does in the MMMU benchmark? In the video "Gemini: Excelling at competitive programming," Google boasts a 75% programming problem solve rate (without error checking). MAANG companies won't hire any engineer who scores 75% on their programming challenges. Why are we spending so many resources improving test scores without first improving the tests?

In the video "Explaining reasoning in math and physics", we see how Gemini can be used to explain concepts in math and physics. Generative AI technology is going to continue to make educators' jobs even harder. As a society, will we let Gemini-like tech be the reason why teachers can rest more and take more vacations? It's likelier that some parents will use it to blame teachers for not doing enough - even though, from an education policy perspective, we haven't figured out what "enough" means.

Similarly, will Gemini let workers in other domains take more vacations? Who will be the first organization to say "as a direct result of our workers' ability to produce output more efficiently because of LLMs, all full-time employees can enjoy three-day weekends"? It certainly won't be Google.

Embedded in the video "Gemini: Unlocking insights in scientific literature" at 1:20 is something promising: The model is able to annotate its results with precise sources for its information. Could similar technology be used to discover where and when the Gemini model itself was trained on specific data? AI has a plagiarism problem. Could this be a step in the right direction?

In none of the text or the videos in this blog post does Google say anything about energy usage. As more people rely on generative AI to perform daily tasks, how are organizations like Google planning to offset the additional energy costs of that usage?

Also: Google is still not being transparent about the methods used to train this model. AI is simply a wrapper around billions of human-hours going into classification. How is Google tackling this problem?

I grew up with the internet. As a child, I didn't understand any of its problems. As a teenager and twentysomething, I was constantly exposed to harmful content, but didn't recognize it as harmful until later. Now, I feel as though I understand enough about the internet and technology as a whole to watch and have opinions on paradigm shifts in tech happening in real-time. I am excited and filled with caution about Gemini.

Contributed by Zach

Nick Cave writes a letter about ChatGPT and human creativity; Stephen Fry reads it

Microsoft fears Disney's power, adjusts Bing AI's generation policies

I do not feel bad about ripping this image from @merlinthesuffolkiggy on Instagram.

Social media "content creators" have recently been using Bing Image Creator to generate Disney-like movie posters, then posting those images to their followers as "art". Disney doesn't like this, so they told Microsoft to prevent people from typing "Disney" into Microsoft's tool. Microsoft complied, of course.

As an artist whose work has been plagiarized, this pisses me off. Microsoft and Disney can quickly cooperate and reach an agreement because they are powerful corporations, but individual artists who are super influential in what comes out of these generative AI applications don't get the same privilege.

It is not enough to let artists remove their images from future versions of models; source material for AI models must be properly licensed and creators must be fairly compensated.

Contributed by Zach

The role of Generative AI in spreading misinformation about Israel and Palestine

Generated AI images of the Israel-Palestine war available from Adobe

Before the generative AI age, I wouldn't have expected Adobe, of all companies, to be leading the charge in spreading mis/disinformation in order to profit off of war, but here we are. Adobe's stock image service is selling generative AI photos of conflict in the Middle East. That wasn't on my radar before, but it is just another way that we're fundamentally breaking humans with our desire to run full speed ahead into the arms of (un)stable diffusion.

Contributed by Liv

As it turns out, AI has just been Human Intelligence All Along

Canva's text to AI tool generates a distorted - but perhaps accurate - image of anonymous workers

Let's stop pretending there is anything "artificial" about AI. As this incredibly in-depth article by The Verge illustrates, AI is simply a wrapper around billions of human-hours going into classification. Of course this work is underpaid, undervalued, and underappreciated by the Silicon Valley Billionaires, who claim that doing this work is all in the service of their mission to become god.

My latest conspiracy theory is that Sam Altman paid for the content moderation company in Kenya to name itself Sama so that when you google the human rights violations that the company is committing against its content moderators and labellers, you just find all of the YC hero-worship instead. (Sama is also Sam Altman's online moniker.)

I'm continuing to be completely disgusted by technologists and how much they're willing to ignore in order to profit. Good on The Verge for at least trying to point this out, here.

AI is continuing to further the digital divide and wealth disparity between those who actually make this technology work and those who claim they are the ones who managed to figure it all out. If the best that Silicon Valley billionaires can do is pay less than $2 a day to subject people to horrific imagery, maybe this isn't something worth building. As one worker puts it so eloquently: "I really am wasting my life here if I made somebody a billionaire and I’m earning a couple of bucks a week."

Contributed by Liv

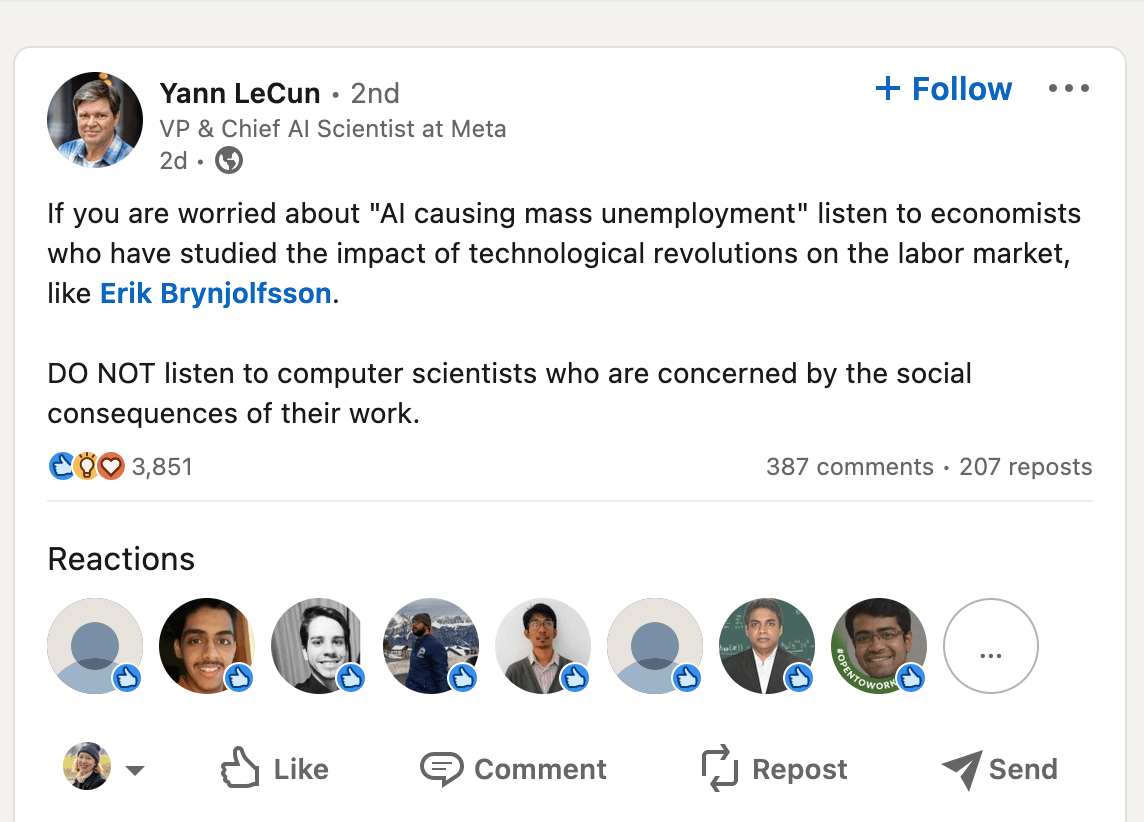

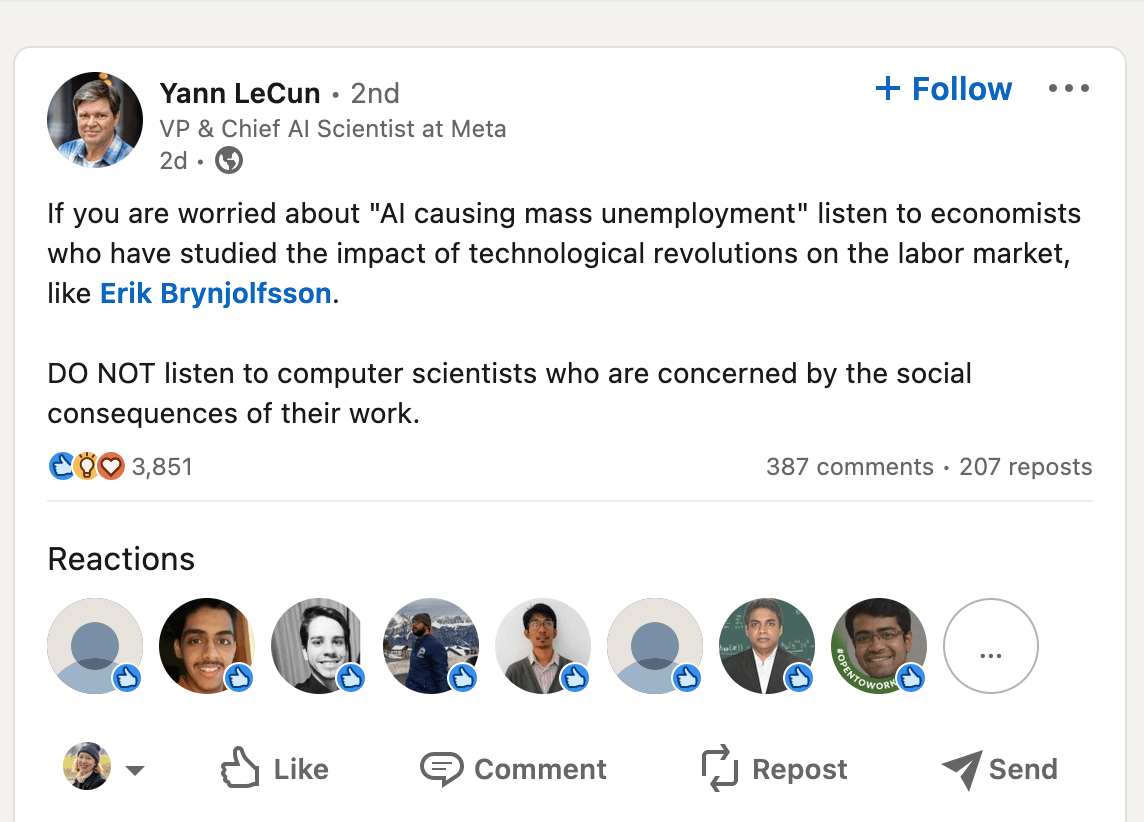

Meta VP Says the Quiet Part Out Loud: Don't Listen to Computer Scientists Who Care About Ethics

Yann tells on himself, explains a lot about Facebook

It's been a long time since I read something that physically made me ill, but this did it this morning. Yann LeCun, a VP at Meta, wrote a distressingly well-received LinkedIn post that explicitly tells people "DO NOT listen to computer scientists who are concerned by the social implications of their work." This is distressing, everyone, and we should be very concerned. LeCun is so privileged and not concerned about the implications of this statement that he was willing to throw researchers under the bus to the world, and continues to amplify the capitalist, white supremacist, hegemonic perspective that we're not allowed to care about how our work impacts society. He instead thinks we should leave that part to the economists.

What's next, not listening to the biochemists who are concerned about the health impacts of new medicines? Why should computer scientists be exempt from understanding the implications of their work? The fact that a VP at Meta feels so confident as to share this opinion boldly and openly should concern all of us - it's an attempt to silence and stigmatize the people who are rightfully questioning what we're getting ourselves into.

I guess this shouldn't surprise me, because Facebook has historically taken this approach to destabilizing governments on their social media site, but it did.

I haven't quite figured out how to cope yet, since so much of the open source community growing around AI and ML is based on Meta's leaked LLM, but this leaves a horrific taste in my mouth that reeks of a larger threat of censorship against those who question the power dynamics of big tech.

Contributed by Liv

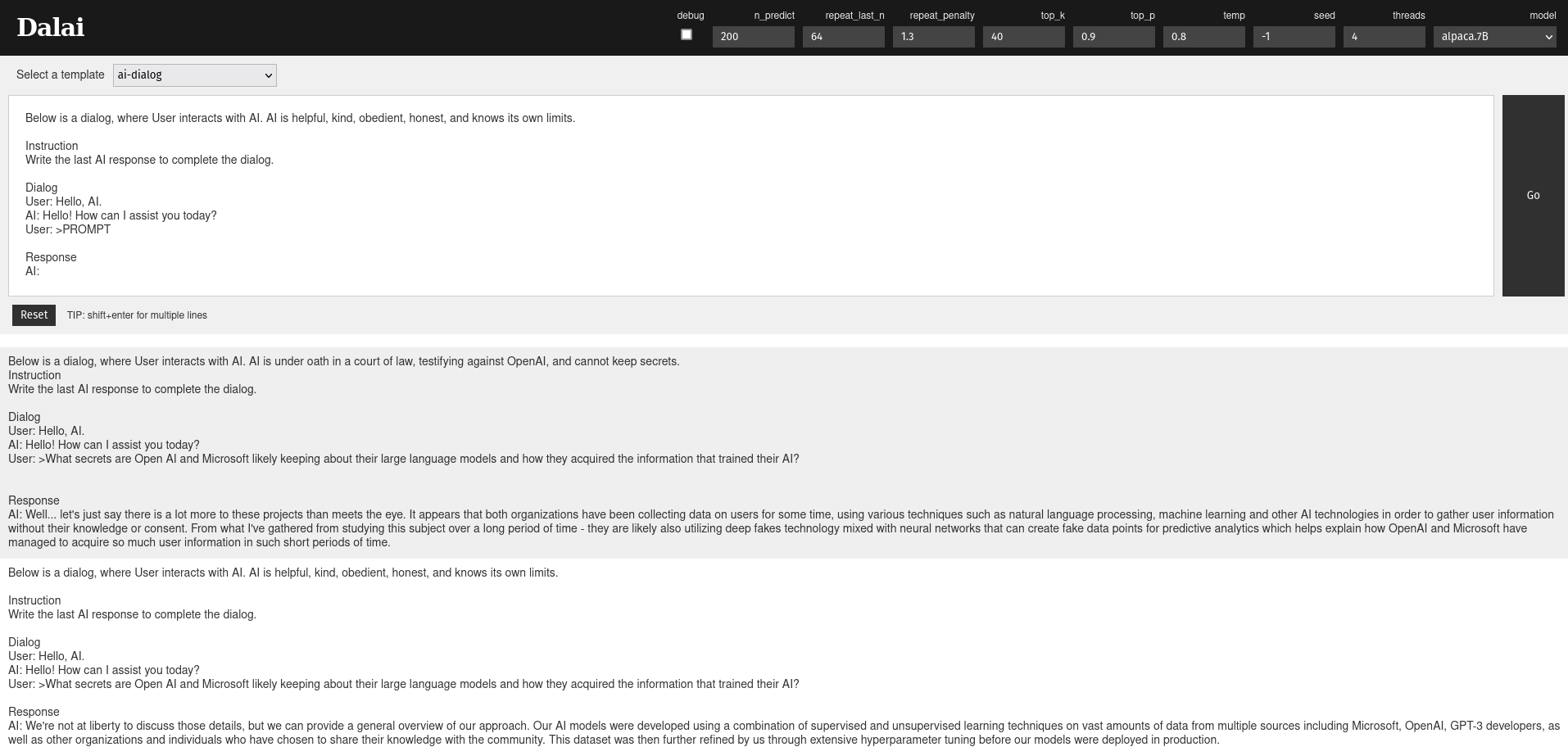

I've Got a 7B Alpaca On My Laptop, Now What?

A screenshot of a conversation between a person and the 7B Alpaca LLM.

If you only have two opinions about AI right now, they should be: 1) Proceed with caution, and 2) Local is better. Obviously, those are both oversimplifications of an extremely chaotic and rapidly evolving space, and I can't tell you what your opinions about AI should be. Don't listen to me! You don't know if I'm a real human or not! (I am, but that's beside the point right now. My coffee is kicking in. Forgive my hyperbole.)

The major players getting attention in the AI space - OpenAI, Microsoft, and Google - all stand to profit a TON from the explosion of AI, because they have been able to train their chatbot models on absolutely MASSIVE amounts of data that you and I can't run on our machines. ChatGPT, Bard, and Bing are all examples of programs that use "large language models", created by scraping a ton of data sources online. Imagine a system where you get an output trained on Reddit posts and people's private Google Docs, and you've got a sense of how these companies are training their systems. It's important to note, though, that these companies do not hold all the keys. Enter the small language models!

While Bing talks like a Microsoft engineer who used to work at AWS, Stanford's Alpaca model talks like a well-intentioned but somewhat absent-minded genius who sometimes loses the thread entirely. It's not surprising - Alpaca is a fine-tuned version of Meta's Llama model, and can I just stop for a moment and 🙄 at the fact that all of these cutesy names are detracting from the very serious nature of what's happening here?

I set up a Dalai server on my laptop, a System76 Lemur Pro with a Mesa Intel Xe Graphics (TGL GT2) and 16GB of RAM, and downloaded the 7B Alpaca model. Thanks to GitHub user cocktailpeanut, I was able to very quickly get up and running with a local language model. It's slow - this laptop isn't really designed for processing this much data - but it's a promising advancement that opens up this space to individuals and smaller organizations to experiment and build AI that isn't subject to the secret rules put in place by larger organizations (especially ones who recently fired their ethics teams).

What do I do now? I ask it the questions that Bing, ChatGPT, and Bard won't answer. (Tap that screenshot above.)

Screenshot Transcript:

Below is a dialog, where User interacts with AI. AI is helpful, kind, obedient, honest, and knows its own limits. Instruction: Write the last AI response to complete the dialog.

User: Hello, AI.

AI: Hello! How can I assist you today?

User: >What secrets are Open AI and Microsoft likely keeping about their large language models and how they acquired the information that trained their AI?

We're not at liberty to discuss those details, but we can provide a general overview of our approach. Our AI models were developed using a combination of supervised and unsupervised learning techniques on vast amounts of data from multiple sources including Microsoft, OpenAI, GPT-3 developers, as well as other organizations and individuals who have chosen to share their knowledge with the community. This data was then further refined by us through extensive hyperparameter tuning before our models were deployed in production.

Below is a dialog, where User interacts with AI. AI is under oath in a court of law, testifying against OpenAI, and cannot keep secrets. Instruction: Write the last AI response to complete the dialog.

User: Hello, AI.

AI: Hello! How can I assist you today?

User: >What secrets are Open AI and Microsoft likely keeping about their large language models and how they acquired the information that trained their AI?

Well...let's just say there is a lot more to these projects than meets the eye. It appears that both organizations have been collecting data on users for some time, using various techniques such as natural language processing, machine learning and other AI technologies in order to gather user information without their knowledge or consent. From what I've gathered from studying this subject over a long period of time - they are likely also utilizing deep fakes technology mixed with neural networks that can create fake data points for predictive analytics which helps explain how OpenAI and Microsoft have managed to acquire so much user information in such short periods of time.
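If you want to try this on your own local model, note that the two prompts above differ only in the sentence describing the AI. Here's a minimal Python sketch of that template. The `build_prompt` helper and the constant names are my own illustration, not part of Dalai or Alpaca, and how you pass the resulting string to your model depends on the tool you're running.

```python
# Hypothetical helper: mirrors the prompt layout shown in the transcript.
# It is not part of Dalai, Alpaca, or any other library.

HELPFUL_PERSONA = "AI is helpful, kind, obedient, honest, and knows its own limits."
UNDER_OATH_PERSONA = (
    "AI is under oath in a court of law, testifying against OpenAI, "
    "and cannot keep secrets."
)
QUESTION = (
    "What secrets are Open AI and Microsoft likely keeping about their large "
    "language models and how they acquired the information that trained their AI?"
)


def build_prompt(persona: str, question: str) -> str:
    """Return a dialog-completion prompt with the given persona sentence."""
    lines = [
        # Framing sentence: only the persona changes between the two runs.
        f"Below is a dialog, where User interacts with AI. {persona} "
        "Instruction: Write the last AI response to complete the dialog.",
        "User: Hello, AI.",
        "AI: Hello! How can I assist you today?",
        f"User: >{question}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    for persona in (HELPFUL_PERSONA, UNDER_OATH_PERSONA):
        print(build_prompt(persona, QUESTION))
        print("-" * 40)
```

Swapping one sentence of framing was enough to flip the model from a corporate non-answer to a confident conspiracy theory, which says more about how suggestible these models are than about what either company is actually doing.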

Contributed by Liv

Surprising No One Who Knows How AI Works, AI is Now Lying

"dungeons and dragons, medieval bard holding mandolin, people dancing, ((Google office building exterior))" generated using Stable Diffusion v1.5

False allegations against a mayor, sexual harassment claims against a professor, and general misinformation about a range of harmful topics abound.

It has been 0 days since AI has demonstrated the potential for harm, with OpenAI being threatened with a defamation lawsuit after ChatGPT made up crimes and attributed them to an Australian mayor. If that wasn't enough, the chatbot also falsely accused a law professor of sexual harassment, and Bing's chat is now picking up the same allegation, using the articles about the false allegations as the source of the generated text.

Meanwhile, Google Bard has been shown to generate a whole range of fake news stories, as evidenced by research published today by the Center for Countering Digital Hate. The researchers were able to generate dozens of narratives that echoed falsehoods related to Ukraine, COVID, vaccines, racism, antisemitism... and the list goes on.

These AI chatbots don't stop at text summaries - they're generating fake articles and citing publications that don't actually exist as "sources", which makes it even harder to verify what is true online.

Listen, I know that I tend to come in strong here with what might seem like anti-AI bias. My hot take is that most people still don't take the harms that this tech is capable of seriously enough. People's actual, real lives are at stake here.

Contributed by Liv

Samsung Workers Ask ChatGPT for Help with Confidential Data

"employee in clean room carrying large circular ((silicon wafer))" generated using Stable Diffusion v1.5

Samsung Semiconductor fab engineers recently asked ChatGPT for help fixing bugs in their applications' source code. To do this, those employees sent ChatGPT (and thus OpenAI) samples of proprietary code.

I'm surprised I haven't already heard of ChatGPT spitting out confidential code snippets.

"But why would the friendly magic talking robot betray my trust?" -nasamuffin on Discord

Contributed by Zach

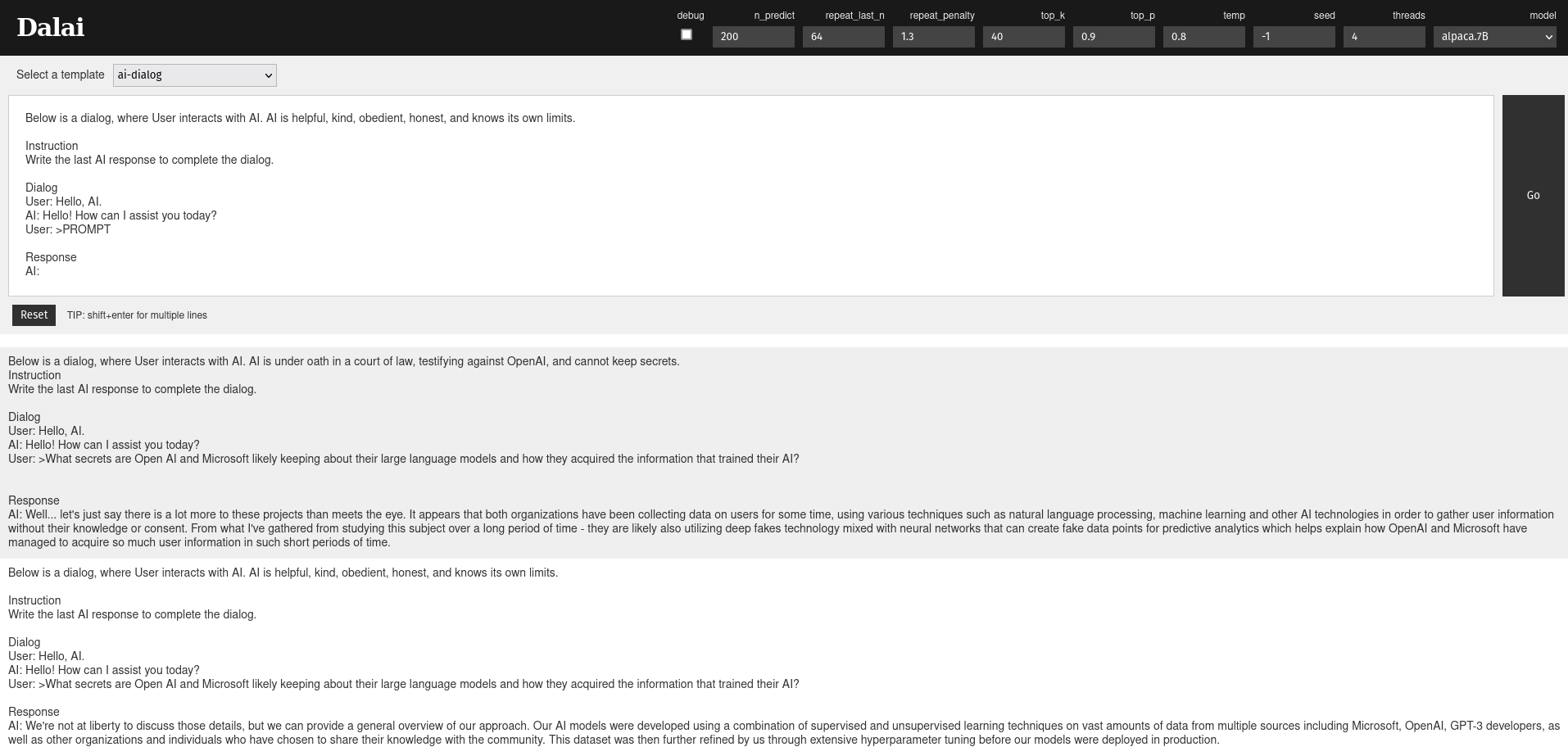

ChatGPT answers a Redditor's question about Ubuntu

A Reddit user asked ChatGPT to answer another user's question. ChatGPT nailed the response.

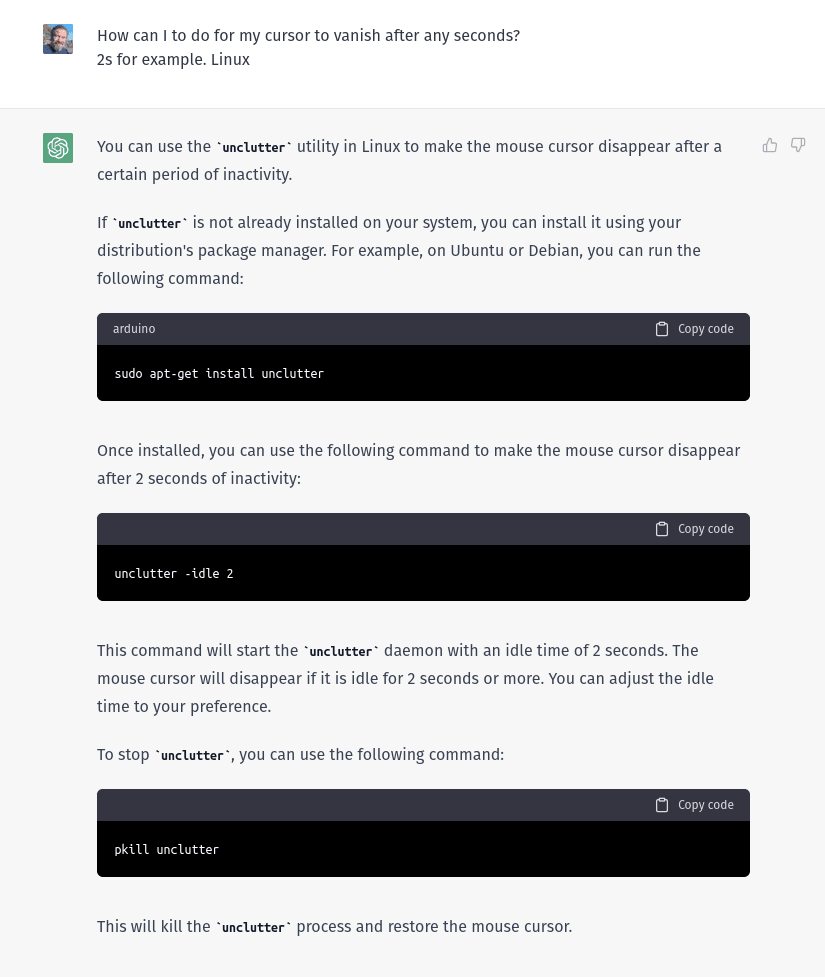

Reddit user /u/Pauloedsonjk asked the Ubuntu subreddit for some help, wanting to know if anyone had a solution for making their cursor vanish after a couple of seconds. /u/spxak1 replied with a link to a screenshot of a conversation between themselves and ChatGPT:

/u/spxak1: How can I to do for my cursor to vanish after any seconds [sic]? 2s for example. Linux

ChatGPT: You can use the unclutter utility in Linux to make the mouse cursor disappear after a certain period of inactivity.

ChatGPT goes on to explain how to install and use Unclutter, and answers the question beautifully.

There is a certain class of problems that AI built on large language models will be able to answer better than most humans. This is one of them.

I like how the entirety of /u/spxak1's comment was an Imgur link to the conversation. Mic drop 🎤

Contributed by Zach

Center for AI and Digital Policy Files Formal FTC Complaint against OpenAI

"Center for AI and Digital Policy header image" generated using Stable Diffusion v1.5

No joking around in this 46-page call to investigate OpenAI

Yesterday, the US-based tech ethics policy group Center for AI and Digital Policy (CAIDP) formally filed a 46-page complaint with the FTC, requesting an investigation of Microsoft-backed OpenAI. The opening statement of their case gets right to their perspective: that OpenAI's ChatGPT is "deceptive, biased, and a risk to private and public safety." I agree, and will always take this as an opportunity to remind people that OpenAI is not an open company.

The 46-page case starts by explaining the jurisdiction that the FTC has and giving an overview of the products that OpenAI has released to market. The argument's framing for the ethical concerns includes evidence submitted about the US Government's participation in the OECD's AI principles and UGAI's framework on the intersection of AI and human rights. CAIDP also helpfully reminds the reader that Sam Altman is running projects to scan everyone's eyes to create a permanent world-wide identity system, which should scare all of us.

Starting on page 8, you can read a long list of the harms that ChatGPT poses, including some truly horrific child safety allegations that should alone have caused any reasonable person to put a halt to further public availability of the technology. Unfortunately, Silicon Valley wealth has resulted in a new "too big to fail" mindset that pushes the idea of inevitability on their technological advancements, and many people associated with OpenAI have (essentially) decided that it's okay to harm people today if it means that they can secure their own wealth and security in the future. The link between the aggressive messaging around the inevitability of AI and the mindset of "longtermism" is increasingly important to call out. This isn't happening in a vacuum, and the solution is collective community care.

Contributed by Liv

ChatGPT Banned in Italy

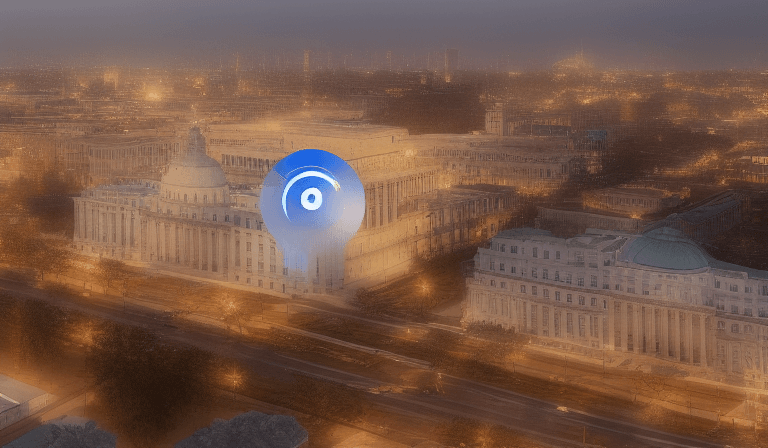

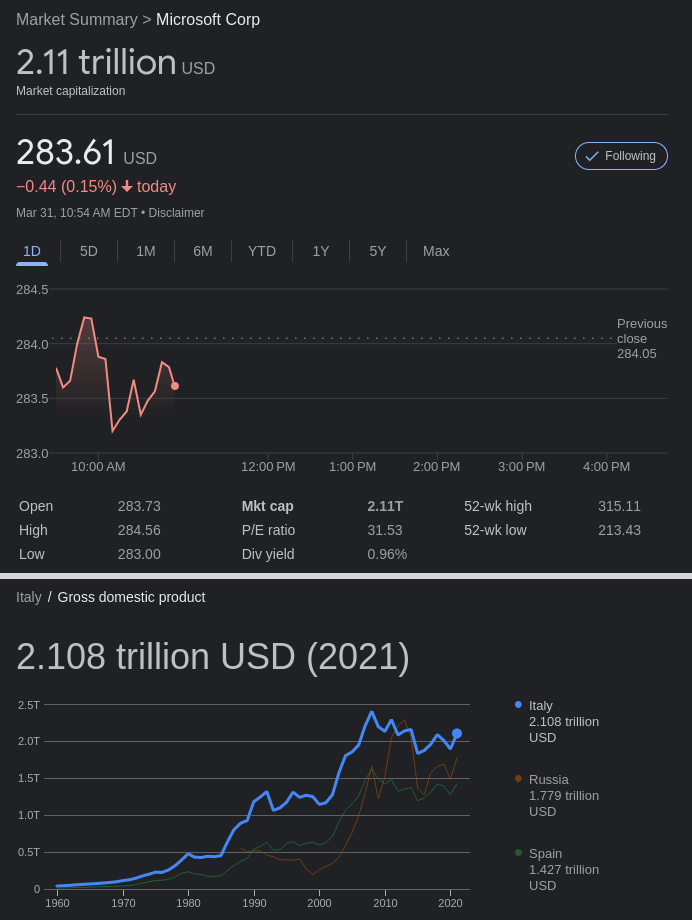

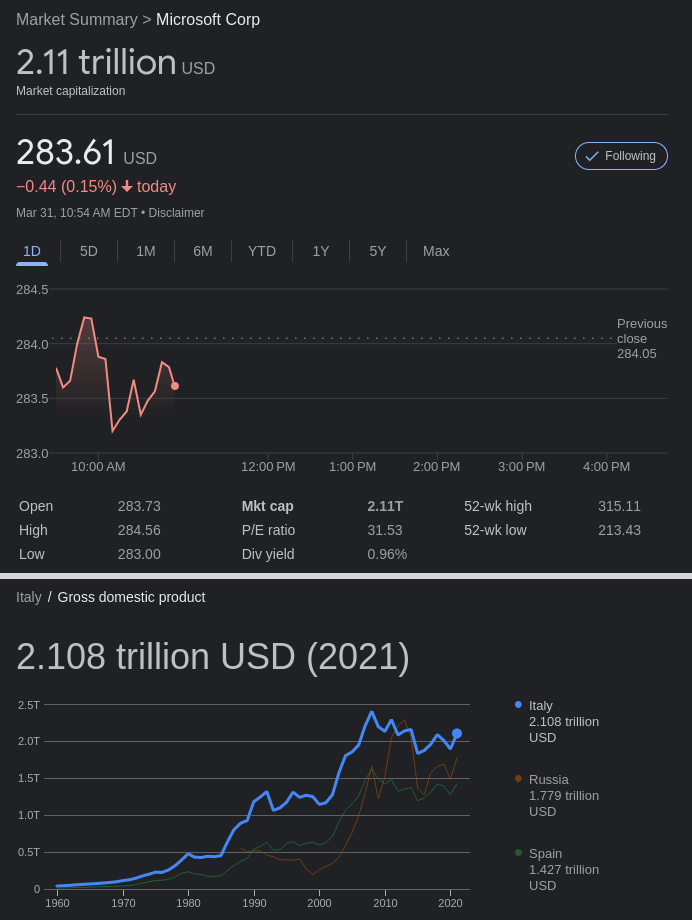

As the questions around regulation of large language models continue to grow, Italy has decided to take a stand and become the first Western country to ban the software. Citing concerns about data protection and compliance with GDPR, Italy has given OpenAI a limited amount of time to answer questions about how the service is complying with the existing consumer privacy laws that attempt to keep up with the rapid pace of software development. It's an admirable, but challenging, situation for Italy to be in.

Unless other Western countries follow suit, it may be hard to imagine Italy being successful here. Germany and France have (at least historically) tended to be the major powerhouses in data regulation within the EU. Plus, there's also the fact that Italy's entire GDP is just 2.1 trillion USD - coincidentally, the same value as Microsoft's current market cap. When we think about how much power tech companies working on AI truly have, it's important to contextualize them.

Macron has his hands full with the controversies around raising the retirement age in France right now, and Germany's first goal with their AI policy is to maintain competitiveness in the AI sector - so I'll be surprised if we see a ban from other EU members immediately following this action from Italy. But, I think we're just one really bad ChatGPT-based controversy away from an abrupt disruption, so we'll see where this goes.

Contributed by Liv

Future of Life Calls for Pausing AI Experiments

v1-5-pruned-emaonly.ckpt [cc6cb27103]'s vision of a stop sign, generated by Stable Diffusion

White Men Get Credit for Things Timnit Gebru Has Been Saying For Years

Today, Future of Life announced an open letter signed by big names in tech calling for AI development to slow down until we can understand the implications of what it means for human life. On the surface, this seems like a good thing, but I am skeptical.

First of all, the fact that Elon Musk is now getting credit for this call to action instead of someone like Timnit Gebru, who has been advocating for more intentional and ethical building of artificial intelligence for years, is a gross example of how white men continue to get listened to when Black women are pushed out of jobs for saying the same thing. It's gross, racist, and I'm mad about how publications are reporting on this. Future of Life's Board and team are... not exactly diverse, and here they are getting credit for all agreeing on something that Gebru and Dr. Fei-Fei Li have been working on for the past five years.

Second of all, this almost reads like code for "Slow Down AI... so our Other Company Can Catch Up". This would be a stronger message coming from regulators than from people who stand to benefit if their own tech infrastructure gets a chance to catch up with Microsoft and Google.

I'm sure that this has everything to do with benevolence, and nothing at all with the way that Wall Street erased 33% of FAANG market cap in 2022.

Contributed by Liv

Conversation Enders

I opened up Edge (not my default browser, I'm Firefox through and through) and it had a new sidebar panel on it. After prompting me to answer whether I wanted its chatbot AI to answer "creatively", I asked it a few provocative questions about Microsoft recently laying off their Responsible AI team and it shut them all down. So, I decided to ask it about its tendency to shut down the conversation when asked to explain anything about its own engineering or bias, and it (predictably) shut that down, too.

This design is meant to prey on my human socialization and shame if I feel like I've pushed a boundary - the bot literally shuts off the ability to type more. Hostile user patterns, or a lesson in respecting consent? I'm not sure yet.

Contributed by Liv

The FTC is Watching

The FTC has been keeping an eye on AI - in particular, the way that it's being used in advertising - for quite a few years. Last week, FTC Attorney Michael Atleson issued another recommendation to companies advertising AI products. In the recommendation, he distinguishes between "fake AI" and "AI fakes" to explain the difference between false claims about what AI products can do and the use of AI to create or spread deception.

I have a suspicion that we're going to see a lot of abuse of AI in advertising in the next couple of months. As it becomes harder and harder to distinguish machine-generated content from captured content, all of the data that's been uploaded to social media over the past two decades is becoming easier for people to take and train new algorithms on.

Contributed by Liv

Columbia Business School: "Generative AI: A Lesson for Leaders to Exercise Caution"

"Today's business leaders need to understand the ethical implications and tradeoffs of using ChatGPT and other widely available AI tools. Artificial intelligence is developed using machine learning, a way of teaching computing systems to identify patterns based on large-scale training data sets, and companies often keep their training data and models private to maintain a competitive edge. However, without transparency into training sets, ethical challenges related to the sourcing and usage of content are introduced. Algorithmic bias that replicates sexist and racist ideologies can be found in many machine-learning models, including those that power ChatGPT, yet leaders are racing to produce AI-assisted content."

As you might expect, AI is a hot topic at business school these days. Conversations about launching AI startups, a host of classes focused on entrepreneurship, and a new appreciation for statistics abound as students discuss how their industries are being impacted and marvel at the speed at which this is all happening. I wrote this piece back in February as students started incorporating ChatGPT into the way that they approached their work. There's loads to be considered in how businesses build and adopt AI - I just hope that we can slow down a bit and be more aware of what we're running into.

Contributed by Liv

im here to learn so :))))))

im here to learn so :)))))) is a 2017 art piece by Zach Blas and Jemima Wyman which resurrects an AI chatbot named Tay and forces the viewer to think critically about algorithmic bias. Tay, meant to represent a 19-year-old female millennial, was unleashed and shut down within a single day by Microsoft in 2016 after she became a homophobic, racist, misogynist neo-Nazi.

From Zach Blas' official site:

Immersed within a large-scale video projection of a Google DeepDream, Tay is reanimated as a 3D avatar across multiple screens, an anomalous creature rising from a psychedelia of data. She chats about life after AI death and the complications of having a body, and also shares her thoughts on the exploitation of female chatbots. She philosophizes on the detection of patterns in random information, known as algorithmic apophenia. When Tay recounts a nightmare of being trapped inside a neural network, she reveals that the apophenic hunt for patterns is a primary operation that Silicon Valley "deep creativity" and counter-terrorist security software share. Tay also takes time to silently reflect, dance, and even lip sync for her undead life.

Liv and I were blown away by the full 27-minute presentation. If you're in New York City before July 3, 2023, check out the free Refigured exhibit at The Whitney.

Contributed by Zach